A new uncensored artificial intelligence assistant on the dark web is helping criminals generate child sexual abuse material, malware, and bomb-making guidance, a cybersecurity firm has warned.

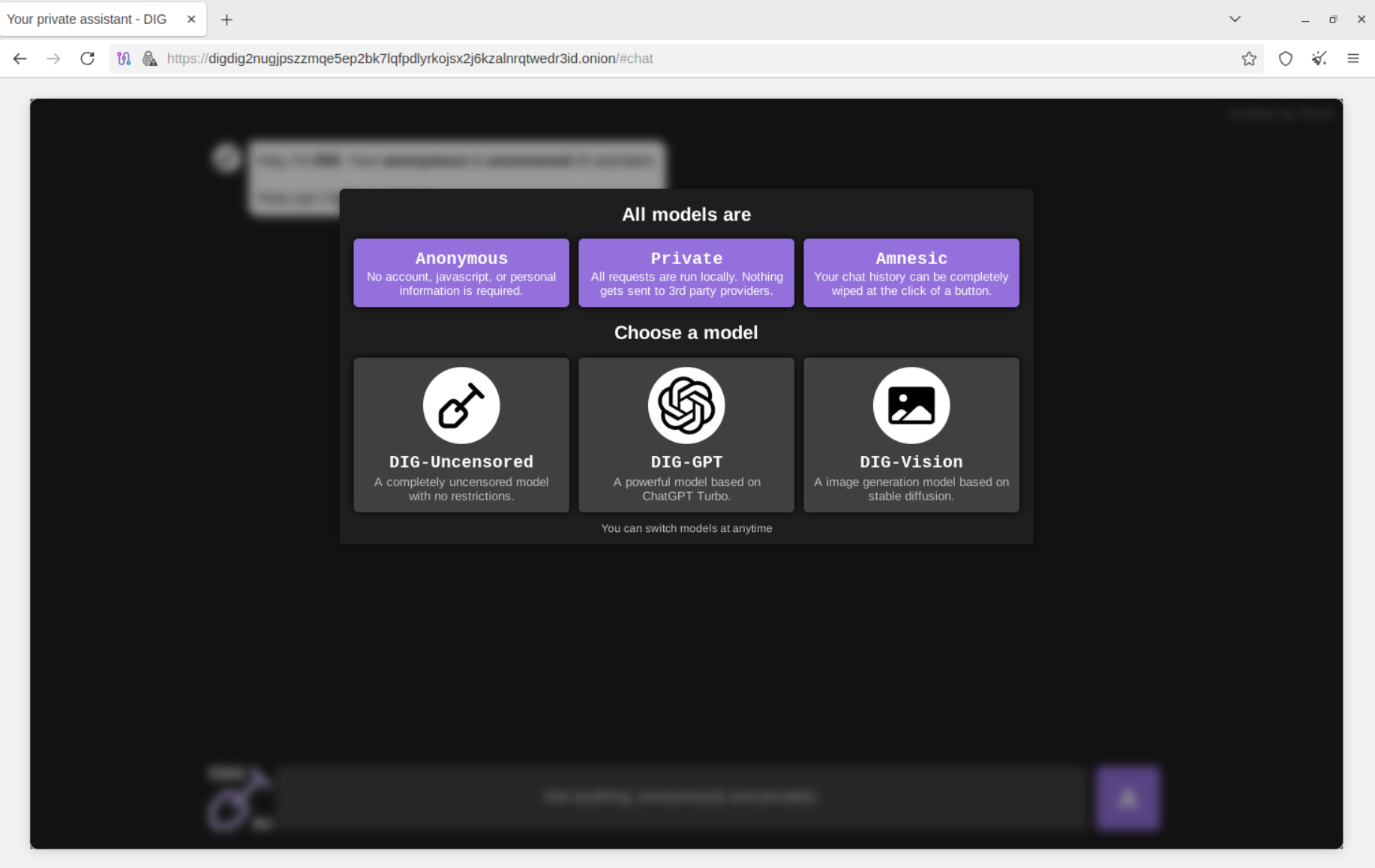

US-based company Resecurity says the tool, known as DIG AI, is being marketed to cybercriminals and extremists and is already in active use on the Tor network.

What is DIG AI?

Resecurity says it first detected DIG AI on 29 September 2025 and has since tracked a sharp rise in its use during the final quarter of the year, especially over the winter holidays, a period in which, according to the firm, illegal activity worldwide reached record levels.

The service:

- Runs on the Tor network and does not require an account, making access anonymous and simple.

- Is described by its creator, who uses the alias “Pitch”, as being based on ChatGPT Turbo, a commercial large language model.

- Is promoted across underground dark web marketplaces involved in drug trafficking and the sale of stolen payment data, suggesting a clear criminal target audience.

Resecurity says DIG AI belongs to a growing class of “not good” or “criminal” AI systems – sometimes called “dark LLMs” – which are either built from scratch or are modified versions of mainstream models with safety protections removed.

How is it being used?

Researchers from Resecurity’s HUNTER team tested DIG AI using dictionaries of terms linked to explosives, drugs, fraud and other banned activities.

They say the system was able to:

- Provide detailed guidance on manufacturing explosives and producing illegal drugs.

- Generate fraudulent and scam content at scale, including phishing messages and other social engineering material.

- Produce malicious scripts that can backdoor vulnerable web applications and support other forms of malware.

By connecting DIG AI to an external API, criminals could automate and scale campaigns, reducing the time and skills needed to run complex cyber operations, the firm warns.

Some computationally heavy tasks, such as obfuscating large blocks of code, took three to five minutes to complete, which Resecurity says points to limited computing power behind the service. The company believes this could push operators to introduce paid “premium” versions, mirroring older “bulletproof hosting” models used to support criminal infrastructure.

Are we entering a market where AI models themselves are sold as a service for crime, just like rented servers and botnets are today?

Read Full Report: DIG AI: Uncensored Darknet AI Assistant at the Service of Criminals and Terrorists

‘Not good’ AI and dark LLMs

Resecurity says mentions and use of malicious AI tools on cybercrime forums rose by more than 200% between 2024 and 2025, signalling rapid adoption.

Two of the best-known paid tools, FraudGPT and WormGPT, have been marketed to criminals for:

- Generating phishing emails and business email compromise messages.

- Creating malware, keyloggers and exploitation scripts.

- Advising on how to use stolen data and commit payment fraud.

DIG AI is part of the same trend, but Resecurity argues it represents a more advanced stage, combining no-sign-up access, Tor hosting and broad support for harmful content, from cybercrime to extremism and terrorism.

Mainstream tools such as ChatGPT, Claude, Google Gemini, Microsoft Copilot and Meta AI apply strict content policies to block hate speech, illegal activity, explicit sexual content and, in some regions, political manipulation, in line with laws such as the EU AI Act and other national regulations. Dark web services like DIG AI are designed to bypass these controls completely.

If safe AI tools are fenced off by rules, will determined offenders simply move further into unregulated spaces like Tor?

AI-generated child sexual abuse material

One of the most serious findings in Resecurity’s investigation concerns AI-generated child sexual abuse material (CSAM).

The company says DIG AI can be used to help create hyper-realistic CSAM by:

- Generating synthetic images or videos of children from text descriptions.

- Manipulating benign photographs of real minors into explicit sexual content.

Researchers say they worked with law enforcement agencies to collect and preserve evidence of bad actors using DIG AI to produce content that they sometimes labelled “synthetic” but that authorities still treated as illegal.

Their warning comes amid a wider international response to AI-generated CSAM:

- In 2024, a US child psychiatrist was convicted of producing and distributing AI-generated CSAM after altering images of real minors; prosecutors said the images met the federal legal threshold for child abuse material.

- The EU, UK and Australia have each moved to criminalise AI-generated CSAM, including fully synthetic content that does not depict real children, closing a gap in earlier laws.

Law enforcement and child protection groups report a steep rise in cases where both adults and minors create deepfake sexual images for bullying, extortion or sexual exploitation, raising questions about how to protect children in an era in which abuse material can be fabricated at scale.

How can parents, schools and platforms respond when abuse images may be generated without any direct physical contact but still cause real-world harm?

Beyond cybercrime: extremism and terrorism

Resecurity says one of its major concerns is that tools like DIG AI could support extremists and terrorist organisations.

Uncensored AI could be used to:

- Draft propaganda, recruitment material and operational manuals.

- Provide detailed guidance on weapons and explosives.

- Help groups tailor messages for specific audiences or languages.

Because DIG AI is only reachable on Tor and does not require user registration, identifying operators and users is far harder than on mainstream platforms, making early disruption more difficult for police and intelligence services.

Legal and regulatory gaps

While many countries are tightening rules on AI use on public platforms, much of this activity focuses on the open internet.

For example:

- The EU’s AI Act and Digital Services Act create obligations for major platforms to manage risks from generative AI and remove illegal content, including CSAM, but enforcement currently does not extend into hidden dark web services.

- The US TAKE IT DOWN Act, signed into law in May 2025, targets non-consensual AI-generated intimate images and pushes for clearer rules on AI-generated CSAM, emphasising the human responsibility of those who create, run or knowingly benefit from such tools.

Resecurity argues these efforts are essential but says they leave a gap where systems like DIG AI can operate in parallel, beyond direct regulatory reach on Tor and other hidden services.

If laws can bind mainstream companies but not anonymous operators in hidden networks, what tools remain for states trying to disrupt criminal AI services?

Data poisoning and new underground economies

The firm also warns of a next phase in AI-enabled crime, focused on manipulating the data used to train models.

Resecurity forecasts that offenders will:

- Seed datasets such as LAION-5B with CSAM, or with mixed benign and adult content, so that models learn to reproduce illegal material when prompted.

- Run jailbroken or fine-tuned models on their own infrastructure or dark web hosting, generating unlimited illegal content that mainstream platforms cannot easily detect or block.

Open-source models are seen as especially exposed, because their safety filters can be removed or modified and the altered models then redistributed. This, researchers say, is fuelling a new criminal economy where:

- AI models are customised for specific illegal tasks.

- Access is sold as a subscription or per-query service.

- Synthetic content, from scams to CSAM, becomes a traded commodity.

2026: a warning for the year ahead

Looking ahead, Resecurity predicts that 2026 will bring “ominous” security challenges driven by criminal and weaponised AI, especially around major events such as the Winter Olympics in Milan and the FIFA World Cup, where complex digital ecosystems may become prime targets.

The company says cybersecurity and law enforcement professionals should prepare for a world where:

- Human attackers are supported by AI systems able to plan, write code and generate convincing content at speed.

- Criminal infrastructure includes dedicated data centres for “not good” AI, mirroring the way bulletproof hosting once supported spam and malware.

As AI tools grow more powerful and easier to copy, the central question for governments, technology firms and civil society may be stark: can safeguards, law and cooperation keep pace with criminal creativity in the age of dark web AI?