SINGAPORE: As Singapore prepares for a new wave of AI-generated deception, a government-backed research centre is racing to build tools that can authenticate digital content at scale — and to teach the public what’s at stake when even human intuition can no longer separate real from fake.

A Nation Confronts the Limits of Human Judgment

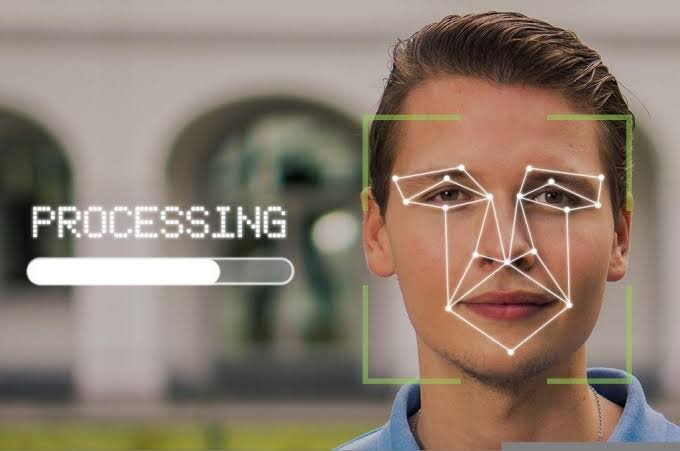

The Centre for Advanced Technologies in Online Safety, or CATOS, was created in 2024 with a daunting mandate: help Singapore navigate an information ecosystem where authenticity is no longer guaranteed. With AI tools capable of producing near-perfect fabricated videos and audio, researchers here believe the instincts people once relied on to detect digital manipulation can no longer keep pace.

“Unnatural blinking and blurring of the mouth might have worked in the past,” said Dr. Yang Yinping, director of CATOS and senior principal scientist at A*STAR’s Institute of High Performance Computing. “But today, even the world’s best tools cannot consistently detect these very realistic deepfakes.”

To her, the challenge is as much social as technological. People, she argues, must learn to verify sources rather than trust what their eyes perceive. And, equally important, they must have “a trusted adult,” a person or institution to turn to when the real and fake blur.

The centre is backed by a five-year, US$50 million (about ₹445.6 crore) investment under Singapore’s Research, Innovation and Enterprise 2025 Plan, signalling the urgency of building resilience before the next major wave of misinformation strikes.

Building Tools for an Era of Synthetic Media

CATOS’ most ambitious project is Provo, a free online tool slated for launch in 2026. The platform allows creators, newsrooms and public agencies to embed cryptographic provenance labels into photos and videos: metadata that functions like nutrition labels on food products. Anyone using the Provo browser extension can inspect the embedded information: the original publisher, the date of first posting, and whether the file has been edited. If a photo or video is altered without authorisation, the authenticity labels disappear automatically.
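For readers curious how a provenance label can be tied to a file, the sketch below shows the general idea in Python. It is not Provo's implementation, which has not been published. The function names, field names and key are hypothetical, and it uses a shared-secret HMAC from the standard library as a stand-in for the public-key signatures that real content-provenance systems typically rely on.

```python
import hashlib
import hmac
import json


def make_label(content: bytes, publisher: str, first_posted: str, key: bytes) -> dict:
    """Build a signed label binding publisher details to the exact file bytes.

    All field names here are illustrative assumptions, not Provo's format.
    """
    claims = {
        "publisher": publisher,
        "first_posted": first_posted,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialise deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify_label(content: bytes, label: dict, key: bytes) -> bool:
    """Return True only if the label is untampered and matches the content."""
    payload = json.dumps(label["claims"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, label["signature"]):
        return False  # the label itself was modified
    current_hash = hashlib.sha256(content).hexdigest()
    return label["claims"]["content_sha256"] == current_hash


if __name__ == "__main__":
    key = b"hypothetical-signing-key"  # assumption: stands in for a publisher's key
    original = b"raw bytes of a photo"
    label = make_label(original, "Example Newsroom", "2026-01-01", key)

    print(verify_label(original, label, key))           # True
    print(verify_label(original + b"edit", label, key)) # False
```

Because the label stores a hash of the file's bytes, any change to the file makes verification fail, which is one simple way a tool could stop displaying a label on altered content.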

Provo is being developed in collaboration with Adobe, following a 2024 agreement that brought content-provenance standards to Singapore. The system may eventually integrate Singpass-based identity verification, making it harder for bad actors to impersonate institutions or individuals.

“We see Provo as a hope,” Dr. Yang said, “that together with deepfake detection technology, these tools can contribute to maintaining information integrity on the internet.”

Alongside Provo, CATOS has built Sleuth, a deepfake-recognition platform already used internally by several public agencies and media groups. Sleuth analyses pixel-level anomalies — the invisible seams left behind by generative models — and performs frame-by-frame video inspection to flag manipulated faces, audio or environments.

The team of roughly 50 scientists is preparing Sleuth for eventual public release. But even at a claimed 99 percent accuracy, principal scientist Soumik Mondal expressed caution:

“That 1 percent that Sleuth gets wrong can be catastrophic if we roll it out to everyone in Singapore.”
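Some rough arithmetic shows why a 1 percent error rate matters at national scale. The Python sketch below is purely illustrative: it assumes five million items are checked (one per resident, using the population figure cited by CATOS), that 1 in 1,000 of those items is actually fake, and that the 99 percent accuracy applies equally to real and fake content. Only the accuracy claim and the population figure come from CATOS; the rest are assumptions.

```python
def expected_outcomes(items: int, fake_rate: float, accuracy: float) -> dict:
    """Estimate verdict counts for a detector with the same accuracy on real and fake items."""
    fakes = items * fake_rate
    reals = items - fakes
    caught_fakes = fakes * accuracy        # fakes correctly flagged
    missed_fakes = fakes - caught_fakes    # fakes that slip through
    false_alarms = reals * (1 - accuracy)  # genuine items wrongly flagged
    flagged = caught_fakes + false_alarms
    return {
        "wrong_verdicts": missed_fakes + false_alarms,
        "missed_fakes": missed_fakes,
        "false_alarms": false_alarms,
        # Share of flagged items that really are fake.
        "precision": caught_fakes / flagged,
    }


if __name__ == "__main__":
    # Assumed scenario: 5 million checks, 0.1 percent of items fake, 99 percent accuracy.
    result = expected_outcomes(items=5_000_000, fake_rate=0.001, accuracy=0.99)
    for name, value in result.items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions, about 50,000 verdicts would be wrong, and only around 9 percent of the items flagged as fake would actually be fake, because genuine content vastly outnumbers deepfakes.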

The Search for a Trust Ecosystem

CATOS researchers describe the fight against digital manipulation as a “never-ending cat-and-mouse game.” Detection technologies advance, but so do the algorithms that defeat them. That reality has pushed the centre to think beyond technical fixes and toward cultivating a broader ecosystem of trust.

Provo’s labels, for example, are designed to remain intact even when content is reposted or screen-captured, giving users a persistent thread back to the original source. The team is now in discussions with local content creators, publishers and platform operators to encourage widespread adoption ahead of the official launch. Trust, however, cannot be encoded solely in metadata. Dr. Yang argues that interpersonal relationships and community norms still matter.

“Individually, we cannot make 100 percent accurate judgments,” she said. “But with good relationships, we can at least communicate with someone we trust.”

Preparing for the Next Wave of Information Warfare

Even as Provo and Sleuth inch toward public deployment, CATOS officials remain acutely aware of the stakes. Singapore, with its dense digital infrastructure and high social-media penetration, is particularly vulnerable to coordinated misinformation campaigns. A single convincing deepfake, experts warn, could spark panic, manipulate markets or destabilise political discourse.

Content-provenance tools have been slow to gain traction globally, but CATOS researchers see potential for the technology to reshape how information is presented — and trusted — in the region. If adopted widely, provenance labels may become as routine as timestamps or bylines. For now, though, the work continues against a backdrop of rising synthetic media and growing uncertainty.

“We need infrastructure that can handle the sheer volume of content in a population of five million,” Dr. Mondal said. “And we need a public that understands both the power and the limits of these tools.”