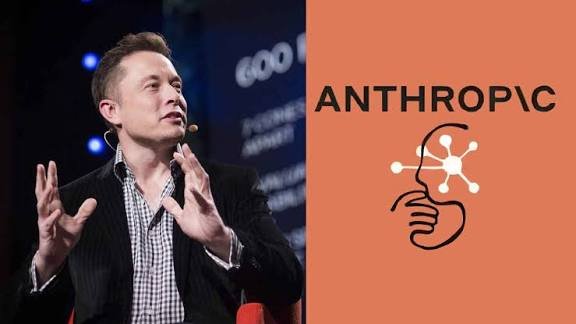

New Delhi/San Francisco: The global artificial intelligence race has moved beyond technology and capital into a phase of public sparring and ethical scrutiny. Tesla and xAI chief Elon Musk has labelled Anthropic’s AI chatbot Claude “misanthropic”, accusing it of bias against certain demographic groups. The remarks came days after Anthropic announced a ₹2.49 lakh crore ($30 billion) Series G funding round that lifted its valuation to about ₹31.5 lakh crore ($380 billion), placing it among the world’s most valuable AI firms.

Posting on X, Musk alleged that Claude shows unfavourable tendencies toward whites and Asians, particularly Chinese users, as well as heterosexuals and men, and said the issue needed fixing. The comment has triggered a fresh debate across the tech ecosystem on algorithmic bias, safety guardrails and the methods used to train large language models.

Anthropic has not issued an official response so far. The company has consistently positioned itself as a “safety-first AI” developer, emphasising controlled outputs and risk mitigation. That positioning has now come under sharper examination, with the controversy being seen not merely as a social media exchange but as part of a broader conversation about accountability and neutrality in AI systems.

Funding reshapes competitive dynamics

The latest funding round was led by Singapore’s sovereign wealth fund GIC and investment firm Coatue, with participation from Dragoneer, Founders Fund, ICONIQ, MGX and existing backers including Microsoft and Nvidia. The capital infusion significantly strengthens Anthropic’s compute and research capabilities and intensifies competition with OpenAI, Google and Musk’s own xAI.

Industry analysts say mega funding rounds do more than expand balance sheets: they reshape market hierarchy and raise expectations around performance, governance and safety. In that context, public criticism from rivals can serve both as a technical challenge and a strategic signal to investors and customers.

Divided response online

Reaction on social media has been mixed. Some users claimed they had encountered overly cautious or filtered responses from Claude that felt unbalanced, while others countered that Musk’s Grok chatbot has faced its own questions around factual accuracy and output quality.

The exchange has revived a familiar fault line in AI development: the tension between bias mitigation and safety constraints. Researchers note that models designed to avoid harmful content often adopt conservative response patterns, which some users interpret as ideological bias. Companies, however, frame such behaviour as necessary to prevent misuse and legal risk.

Earlier concerns and safeguards

Previous reports indicated that Claude had blocked certain requests linked to Chinese firms and issued warnings about potential cyber misuse. Anthropic has argued that layered safeguards are essential to reduce the risk of malicious applications, particularly in areas such as malware generation and sensitive data handling.

Musk, a long-time advocate of stronger AI oversight, has repeatedly warned about systemic risks while simultaneously building competing models through xAI. His latest remarks are therefore being read through both a technical lens and a competitive one, reflecting the increasingly high-stakes nature of the sector.

Power, valuation and regulation

With AI firms commanding multi-lakh-crore valuations and controlling vast datasets and compute infrastructure, questions of bias, transparency and auditability are becoming central to their credibility. Experts say independent benchmarking, third-party audits and clearer regulatory frameworks will be critical to objectively assess claims of bias and safety.

For now, Anthropic’s silence and Musk’s sharp critique have added a new layer to an already intense AI rivalry.